TRAFFIC DETECTION WITH DEEP LEARNING

By Michael Peccia

ABSTRACT

This project aimed to develop a program capable of counting semi-trucks, sedans, and SUVs in each direction from traffic camera feeds in Lake County, Indiana. Using Python, the Ultralytics library, Google Colab's GPU support, and Roboflow for object annotation, a sufficiently accurate model was trained. Given the video quality constraints of the cameras, it was infeasible to train a model to distinguish SUVs from sedans; however, the model can easily detect and distinguish between trucks and cars. The project also experimented with vehicle speed detection. While the measured speeds fall within the expected range, the results were not verified.

INTRODUCTION

The goal of this project is to design and implement a robust software solution capable of detecting and categorizing vehicles captured by camera feeds and counting how many of each category travel in each direction. Why might this be important? First, it can be used for traffic management. Knowing at which times of day traffic flow increases or decreases could inform traffic light timing. Second, it can be used as an indicator of economic growth in an area. If the rate of trucks entering an area is increasing over time, that can indicate economic growth in that area. There are many other ways this data can be used with some creative thinking. Maybe even for pricing billboards?

LANGUAGES/FRAMEWORKS

When it comes to deep learning, working in a Python environment is an easy choice because a vast number of libraries and frameworks exist for it. These libraries provide high-level abstractions for building and training neural network models, which makes it easier to implement complex models with minimal code. A popular framework used in this program is PyTorch. The library used for model training and prediction is Ultralytics (YOLOv8), which is built on top of PyTorch.

COLLECTING AND PREPARING DATASET FOR TRAINING

The first step in this project is collecting the dataset. All the traffic camera footage is sourced from 511in.org. Twenty seven-minute videos from various cameras were sampled and added to the dataset. Once collected, the dataset needs to be prepared for training. The model needs to know what it is being trained to detect, so the next step was to find a service that provides object annotation. This allows for the creation of classes in order to distinguish between vehicles such as sedans, SUVs, and trucks. The service used for this project was Roboflow. Roboflow sampled about 10 to 20 images from each of the 20 videos, and the completed dataset had over 250 images. Shortly after starting to annotate and draw boxes on these images, it became clear that it was infeasible to distinguish between SUVs and sedans. The poor video quality was the limiting factor. If a human struggles and fails to tell SUVs from sedans in the images, a deep learning model trained on those human annotations cannot be expected to do better. With a better quality video feed this would be feasible, but for now the program distinguishes only between cars and trucks. Once annotation is complete, the dataset can be exported and training can begin.

TRAINING DATASET

It's now time to train the model on the dataset. In order to save time, we are going to need access to a good GPU. Luckily, GPUs can be accessed in the cloud for free, and Google Colab is excellent for this type of job. We can open a Jupyter Notebook and write all the Python code for training inside of it. In the notebook, the Ultralytics library is imported along with an API to our dataset from Roboflow. Because the product is expected to be used on live traffic feeds, it is best to train a fast model. Training was based on the YOLO nano model, which trades some accuracy for speed. Given that we are predicting cars and trucks on traffic cameras, the nano model was a good fit and the resulting accuracy was sufficient. The model was trained for 100 epochs with an image size of 1152 and a batch size of 64. Once training finished, the result was a ".pt" file containing the trained model. This was downloaded to the local machine so it could be used for prediction.
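As a rough illustration of the training step described above, the following is a minimal sketch using the Ultralytics Python API. The epoch count, image size, and batch size come from the text; the checkpoint name `yolov8n.pt`, the function name, and the dataset path are assumptions, and the Roboflow download step is omitted. It is not the notebook that was actually used.

```python
# Hyperparameters reported in the text; everything else is left at library defaults.
TRAIN_ARGS = {"epochs": 100, "imgsz": 1152, "batch": 64}


def train_nano_model(data_yaml: str, weights: str = "yolov8n.pt"):
    """Fine-tune a YOLOv8 nano checkpoint on an exported dataset.

    data_yaml is a hypothetical path to the data.yaml file of the exported dataset.
    """
    # Imported lazily so the settings above can be inspected without the library installed.
    from ultralytics import YOLO

    model = YOLO(weights)  # load pretrained nano weights (assumed starting point)
    return model.train(data=data_yaml, **TRAIN_ARGS)


# Example call (needs ultralytics installed and, realistically, a GPU runtime):
# train_nano_model("dataset/data.yaml")
```

A batch size of 64 at an image size of 1152 needs a fair amount of GPU memory, so a smaller batch may be required on some runtimes.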

MODEL RESULTS

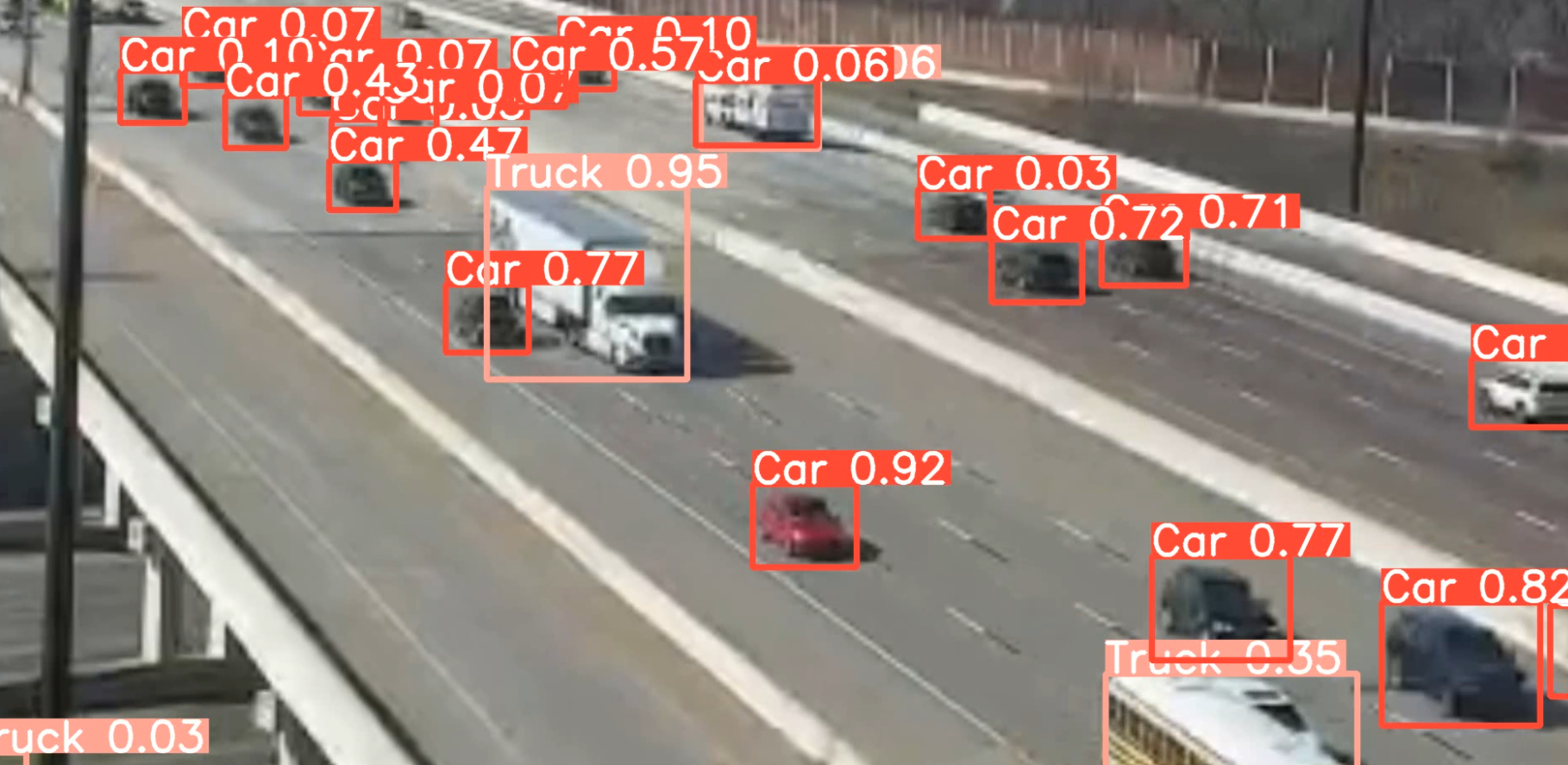

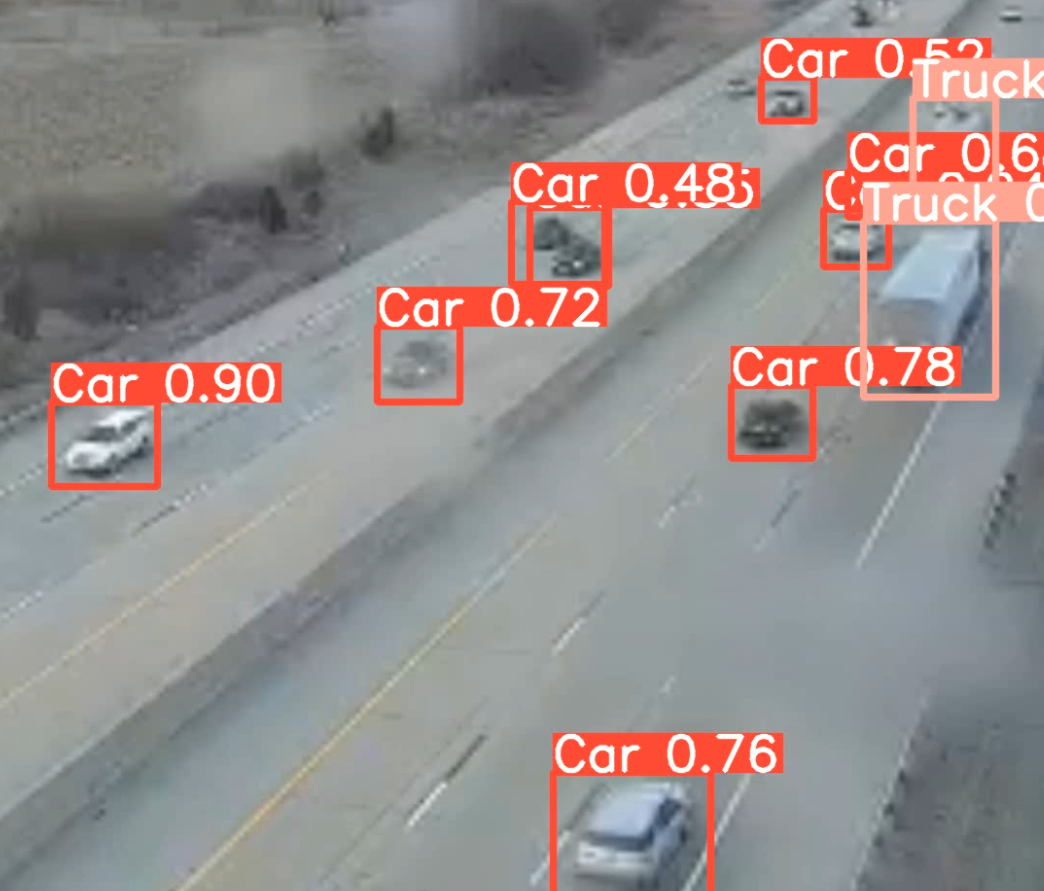

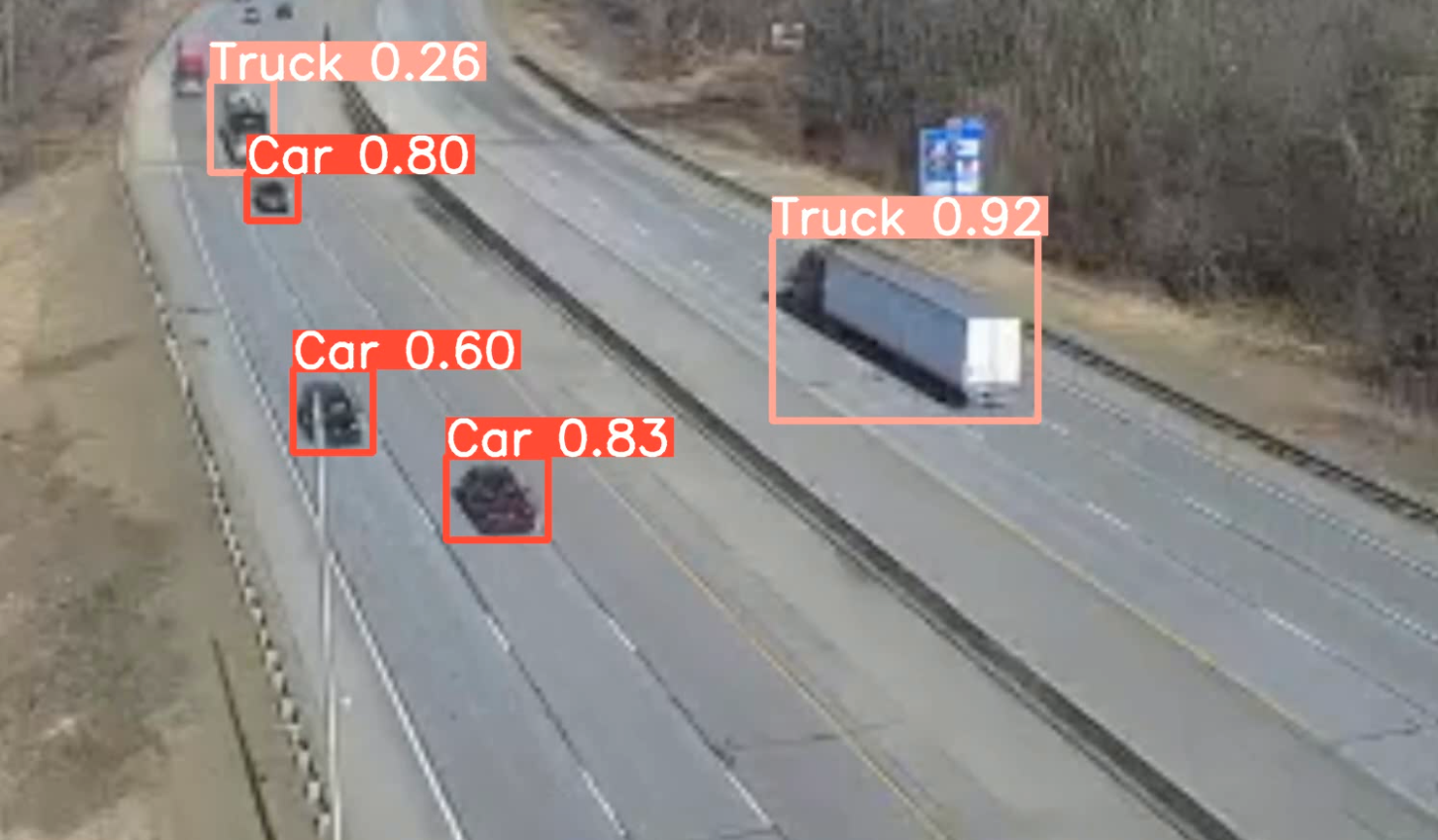

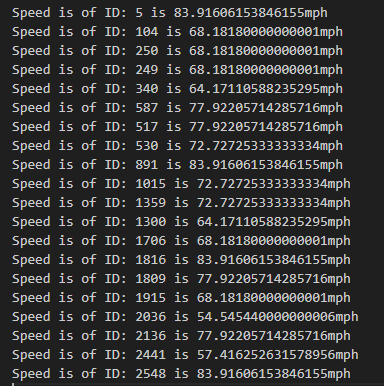

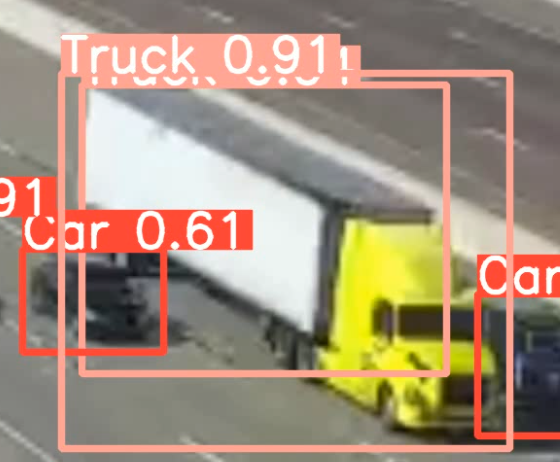

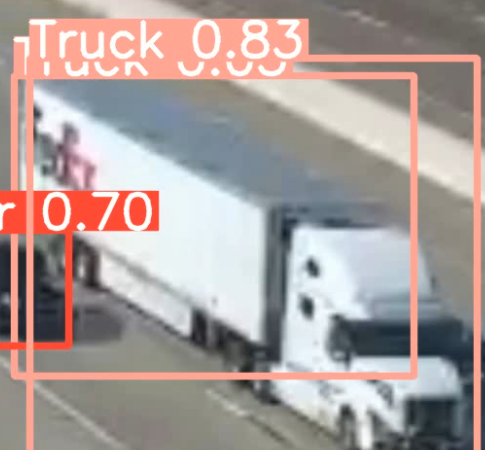

The images above, taken from different cameras, demonstrate the model's prediction capabilities.

OBJECT TRACKING

Now that the model can successfully predict and detect objects, it's time to track them. Every time a new frame comes in, the model treats its detections as new objects. This obviously isn't the case. After some research, I found a YouTube creator by the name of FREEDOM TECH who wrote code for a tracker, and I added the tracker.py file to my program. While no documentation was provided, I was able to figure out how to use it. Two tracker objects were created, one to track the truck class and the other to track the car class. Now that the program can tell individual vehicles apart throughout the duration of a video, it's time to start counting how many of each class are entering and exiting.
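To make the idea of tracking concrete, here is a minimal sketch of a nearest-centre tracker. It is a stand-in written for illustration, not the tracker.py file used in the project; the class name, the `update` signature, and the 35-pixel matching radius are all assumptions.

```python
import math


class CentroidTracker:
    """Give each box a persistent id by matching it to the nearest previous centre."""

    def __init__(self, max_distance: float = 35.0):
        self.max_distance = max_distance  # assumed matching radius in pixels
        self.centers = {}                 # id -> (cx, cy) seen in the last frame
        self.next_id = 0

    def update(self, boxes):
        """boxes: list of (x1, y1, x2, y2). Returns a list of (x1, y1, x2, y2, id)."""
        tracked = []
        new_centers = {}
        for x1, y1, x2, y2 in boxes:
            cx, cy = (x1 + x2) / 2, (y1 + y2) / 2
            # Look for the closest known centre that is not already claimed this frame.
            best_id, best_dist = None, self.max_distance
            for obj_id, (px, py) in self.centers.items():
                dist = math.hypot(cx - px, cy - py)
                if dist < best_dist and obj_id not in new_centers:
                    best_id, best_dist = obj_id, dist
            if best_id is None:           # nothing close enough: treat as a new vehicle
                best_id = self.next_id
                self.next_id += 1
            new_centers[best_id] = (cx, cy)
            tracked.append((x1, y1, x2, y2, best_id))
        self.centers = new_centers        # ids not seen this frame are dropped
        return tracked


# One tracker per class, mirroring the setup described in the text.
truck_tracker = CentroidTracker()
car_tracker = CentroidTracker()

frame1 = car_tracker.update([(100, 100, 140, 130)])
frame2 = car_tracker.update([(104, 110, 144, 140), (300, 50, 340, 80)])
print([t[-1] for t in frame2])  # the first car keeps id 0, the new car gets id 1
```

Keeping a separate tracker per class means truck ids and car ids are counted independently, at the cost of losing an id if the detector flips a vehicle's class between frames.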

ENTERING AND EXITING

The model is now able to track vehicles. Next, it's time to set up a region, which is done by drawing two lines. Depending on the video feed, there can be either a left line and a right line or a top line and a bottom line; it depends on the flow of traffic relative to the camera. In the images below, there is a top line and a bottom line.

If a vehicle hits the top line first and then the bottom line, it is determined to be traveling towards the camera. If it hits the bottom line first and then the top line, it is traveling away from the camera. The two lines are drawn onto the frame using the OpenCV library (cv2) in Python. Since each object is tagged with an id by the tracker, we can keep a counter that is updated every time one of these vehicle ids enters and exits the region. In order to trip the counter, the vehicle must be within a margin of 18 pixels of the line. The value can be changed, but I find it gives reasonable leeway for the low fps footage. The vehicle counter is displayed in the top left of the video as seen below.

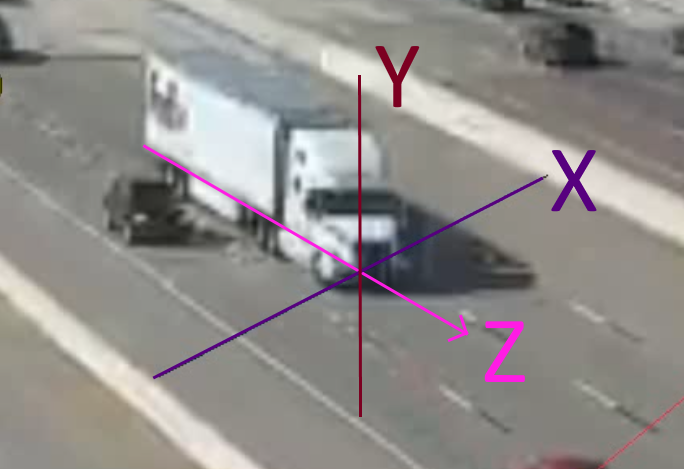
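The two-line rule described above can be sketched as follows. This is an illustrative sketch only: it assumes horizontal lines, uses the vehicle's centre y-coordinate as the tracked point, and assumes the lines are far enough apart that their margins do not overlap. The class and attribute names are hypothetical; only the 18-pixel margin comes from the text.

```python
MARGIN = 18  # pixels; the tolerance quoted in the text


class DirectionCounter:
    def __init__(self, top_y, bottom_y, margin=MARGIN):
        self.top_y, self.bottom_y, self.margin = top_y, bottom_y, margin
        self.first_line = {}  # vehicle id -> "top" or "bottom", whichever was hit first
        self.toward = set()   # ids that hit top then bottom (towards the camera)
        self.away = set()     # ids that hit bottom then top (away from the camera)

    def update(self, vehicle_id, cy):
        """Feed the tracked centre y-coordinate of one vehicle for one frame."""
        if abs(cy - self.top_y) <= self.margin:
            line = "top"
        elif abs(cy - self.bottom_y) <= self.margin:
            line = "bottom"
        else:
            return  # not near either line in this frame
        first = self.first_line.setdefault(vehicle_id, line)
        if first == "top" and line == "bottom":
            self.toward.add(vehicle_id)
        elif first == "bottom" and line == "top":
            self.away.add(vehicle_id)


counter = DirectionCounter(top_y=200, bottom_y=400)
for cy in (150, 195, 300, 410):  # vehicle 7 moves down the frame
    counter.update(7, cy)
for cy in (450, 390, 300, 210):  # vehicle 8 moves up the frame
    counter.update(8, cy)
print(len(counter.toward), len(counter.away))  # 1 1
```

Storing ids in sets means a vehicle that lingers near a line for several frames is still counted once.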

DETECTING SPEED

I was curious whether I could measure the speeds of the vehicles accurately. This wasn't an objective of the project, but I did get something working. To get the speed of a vehicle, I need to flag two points that the vehicle passes and measure the time elapsed between them. I recorded the footage at 60 fps, which means that 60 frames are equivalent to one second. It is very important to note that a constant frame rate is crucial for this approach because the elapsed time is derived from the frame count. If the underlying video from the feed has a fluctuating frame rate, or the config isn't set to the stream's actual fps, the output will be completely inaccurate. This product would only be viable if those factors were sufficiently controlled. I also had to make sure that the lines I used were orthogonal to the velocity vector of the vehicle. I found reference points in the road, namely the black and white lines, and drew lines connecting them. The resulting lines are roughly orthogonal to the vehicles' velocity. Notice that the lines in the left-most image below are not parallel. Don't be tricked; that's simply because we see in perspective. It's the same as how train tracks look like they converge despite being parallel. To calculate the distance between the points, I searched Google and found that the black and white lines are 20 ft long with 20 ft in between them. This means that below, there is 80 ft of distance between the two lines. I was able to calculate the feet per second of every vehicle and convert it to miles per hour. The speed of each vehicle id is logged. Unfortunately, the results weren't verified, but the speeds shown are realistic, which is a positive sign if development were to continue.
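The arithmetic described above can be sketched as a small function. The 80 ft distance and 60 fps figures come from the text; the function and parameter names are hypothetical, and the frame indices in the example are made up for illustration.

```python
FPS = 60            # recording frame rate stated in the text
DISTANCE_FT = 80    # distance between the two reference lines, per the text
FT_PER_MILE = 5280
SEC_PER_HOUR = 3600


def speed_mph(frame_at_first_line: int, frame_at_second_line: int,
              distance_ft: float = DISTANCE_FT, fps: float = FPS) -> float:
    """Convert the frame gap between two line hits into miles per hour."""
    elapsed_s = abs(frame_at_second_line - frame_at_first_line) / fps
    if elapsed_s == 0:
        raise ValueError("the two lines must be hit on different frames")
    ft_per_s = distance_ft / elapsed_s
    return ft_per_s * SEC_PER_HOUR / FT_PER_MILE


# A vehicle that needs 60 frames (1 second) to cover 80 ft travels at 80 ft/s.
print(round(speed_mph(0, 60), 1))     # 54.5
# A vehicle that needs 48 frames (0.8 seconds) travels at 100 ft/s.
print(round(speed_mph(100, 148), 1))  # 68.2
```

Because time is measured in whole frames, one frame of timing error at 60 fps changes the first result by roughly 1 mph, which is why a steady frame rate matters so much here.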

One change that was made was the margin of pixels within which the vehicle had to be of the line in order to be detected. It was lowered from 16 pixels to 4 pixels. In order to get the speed as close to accurate as possible, the vehicle needs to be timed as close to the lines as possible. Unfortunately, due to the low fps, reducing the margin results in an increase in missed vehicles because a vehicle will sometimes skip over the margin between one frame and the next. However, if one were able to keep increasing the distance between the lines while still successfully detecting vehicles, the error introduced by the line margin would become more and more insignificant. Ideally, the footage would be of higher quality and higher fps.
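The trade-off described above can be put into numbers with a short sketch. It assumes the detection band around a line is the line position plus or minus the margin, and that a vehicle moves a roughly constant number of pixels per frame; the function names and the example values for pixels per frame and line spacing are hypothetical.

```python
def can_skip_band(pixels_per_frame: float, margin_px: float) -> bool:
    """True if a vehicle could jump over the whole detection band between two frames.

    The band is 2 * margin_px wide, so a step larger than that can straddle it.
    """
    return pixels_per_frame > 2 * margin_px


def worst_case_distance_error(margin_px: float, line_gap_px: float) -> float:
    """Largest relative error in the distance covered between the two triggers.

    Each trigger can fire up to margin_px before or after its line, so the
    distance actually travelled can differ from the line gap by up to 2 * margin_px.
    """
    return 2 * margin_px / line_gap_px


# Assumed example: a vehicle moving 12 pixels per frame.
print(can_skip_band(12, margin_px=4))    # True: an 8-pixel band can be jumped
print(can_skip_band(12, margin_px=16))   # False: a 32-pixel band cannot

# Doubling the spacing between the lines halves the relative error for the same margin.
print(worst_case_distance_error(4, line_gap_px=200))  # 0.04
print(worst_case_distance_error(4, line_gap_px=400))  # 0.02
```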

DETECTION FAILURES

There is a phenomenon in which trucks are double detected. I noticed that every once in a while, a clearly visible truck would not be counted. When I started debugging, I found that the tracker id would get mixed up because a single truck is sometimes detected twice, as seen below. This seems to happen less than 20% of the time, and it only happens to trucks coming towards the camera. The issue may be the result of flawed dataset annotation, which is the best case scenario as it would be the easiest to fix. It could also be a consequence of training with the nano model, with its lower accuracy showing through. If neither is the cause, the solution may be more difficult. Due to time and resource constraints on my end, I am not delving into this because it could be a lengthy fix, but I am sure it can be done.
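One way to investigate this kind of failure is to flag frames in which two truck boxes overlap heavily. The sketch below is a diagnostic idea only, not something done in the project; it assumes that the duplicate boxes overlap strongly, and the 0.5 threshold and the example boxes are made up.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def overlapping_pairs(boxes, threshold=0.5):
    """Index pairs of boxes in one frame whose IoU exceeds threshold (an assumed value)."""
    return [(i, j)
            for i in range(len(boxes))
            for j in range(i + 1, len(boxes))
            if iou(boxes[i], boxes[j]) > threshold]


# Hypothetical truck detections from one frame: the first two cover nearly the same area.
trucks = [(100, 100, 200, 300), (105, 110, 200, 300), (400, 100, 480, 260)]
print(overlapping_pairs(trucks))  # [(0, 1)]
```

Logging the frames where this list is non-empty would show whether the duplicates come from the detector itself, which would point towards the annotation or model-size explanations given above.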

CONCLUSION

Overall, this project has been a success. The program is able to detect cars with 90% accuracy and trucks nearly every time. However, because of the bug where one truck can be double detected, that truck ends up not being counted. This means that trucks are being counted with around 50% accuracy in the direction facing towards the camera. With continued development, I am extremely confident that this bug can be fixed. Unfortunately, this was done on a short time frame, and it is unrealistic to expect a perfect product under those conditions. What is important is that this research shows where the ceiling is for this type of product and that a highly accurate detection model can be built.

REFERENCES

- "Counting Vehicles | Tracking & Counting Using Yolov8 | Yolov8 Object Detection and Object Counting." YouTube, 22 Apr. 2023, www.youtube.com/watch?v=DXqdjWndooI.

- https://docs.ultralytics.com/

- https://app.roboflow.com/

- https://colab.research.google.com/